LLMs in Research: the Good, the Bad, and the Future

Introduction

In the realm of scientific research, a powerful tool has emerged that is capturing the attention of researchers: Large Language Models (LLMs). These models possess an ability to comprehend, generate, and analyze vast amounts of text. LLMs, such as OpenAI's GPT-4, have generated excitement due to their potential to advance knowledge and expedite the research process. However, it is also important to acknowledge the limitations and challenges associated with LLMs.

We recently began leveraging LLMs on the Enable Medicine Platform, and have just announced the first in what we anticipate to be a long series of such launches. In just the last few weeks, we were able to build a proof of concept that shows how we can use LLMs to help users simplify the process of research, discover data across our platform, and access general biological knowledge. In this post, we hope to highlight both the capabilities and boundaries of these language models and share our thoughts on their potential impact on scientific inquiry.

The Good

LLMs are best known as text-based Q&A tools, but their abilities are much broader and have many more applications. They offer several notable strengths that make them well suited to the scientific research process.

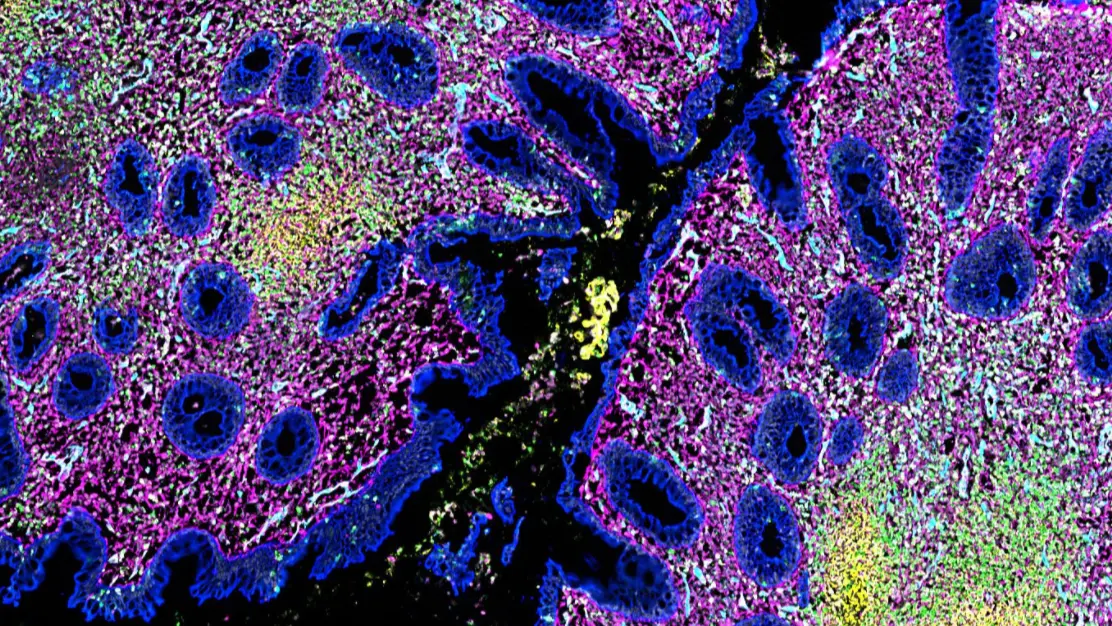

First, they can analyze and interpret large volumes of complex text quickly. This enables researchers to efficiently process extensive scientific literature and diverse datasets, gaining valuable insights and comprehensive overviews of existing knowledge. As an example, Claude (an LLM from Anthropic) was able to parse and answer specific questions about the entire text of The Great Gatsby in less than 30 seconds. Recent advances in multimodal AI have also shown these models’ capabilities to understand images in addition to text.

Moreover, LLMs have access to a vast knowledge set based on their training data, granting them a strong understanding of even advanced scientific concepts. While this knowledge may not always include the most up-to-date information, it serves as a valuable resource for researchers seeking foundational insights.

Finally, LLMs are flexible. They can be directed to refine their answers or produce multiple responses, giving researchers options to explore different perspectives.

By leveraging these strengths, LLMs can empower researchers to navigate complex data, enhance their understanding of scientific concepts, and accelerate the research process.

The Bad

Despite their strengths, LLMs have several limitations that must be considered when using them in scientific research.

One of the main limitations is that an LLM is essentially an unembodied advisor, rather than a research assistant actually in the lab with you. While LLMs can provide valuable insights and predictions based on data, an isolated LLM without more complex tools (web browsing, real-time knowledge, etc.) cannot independently take actions or drive the research process forward. Therefore, it is important to view LLMs as a tool that can support and enhance the research process, rather than a full replacement for the expertise and intuition of human researchers.

Another limitation of LLMs is that they are heavily influenced by their task definition and context. While LLMs can generate insights and predictions based on the input provided to them, the quality and relevance of the input prompt can significantly affect the model's performance. Crafting that input well is known as prompt engineering. It is therefore crucial that the prompt is well-crafted and representative of the research question being studied, and that any data supplied in it is diverse and unbiased. There is an emerging need for this skill, and we’ve learned from firsthand experience that even a small investment in prompt engineering can have an outsized impact.
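A minimal sketch of what a structured prompt can look like, compared with a vague one-line question. The function name, the section labels, and the example text are all hypothetical and chosen for illustration; this is not a description of any particular platform's prompts.

```python
def build_prompt(question: str, context: str, role: str = "research assistant for a biologist") -> str:
    """Assemble a prompt from explicit sections (illustrative layout, not a standard)."""
    sections = [
        f"You are a {role}.",
        # Telling the model what to do when it lacks information reduces guessing.
        "Answer using only the context below. If the context is insufficient, say so.",
        f"Context:\n{context.strip()}",
        f"Question:\n{question.strip()}",
    ]
    return "\n\n".join(sections)


# A vague prompt leaves the task definition up to the model.
vague_prompt = "Tell me about T cells."

# A structured prompt states the role, the allowed sources, and the exact question.
structured_prompt = build_prompt(
    question="Which columns in this dataset describe T cell markers?",
    context="(hypothetical placeholder: paste the dataset description here)",
)

if __name__ == "__main__":
    print(structured_prompt)
```

The same question can then be re-run with a different role or context by changing one argument, which makes it easier to see how much the prompt itself shapes the answer.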

The quality of the data used to train LLMs also has a significant impact on their performance. Biases and gaps in the training data can result in inaccurate or skewed predictions, so it is important that models be trained on diverse and representative data. In addition, LLMs are currently most proficient at text generation, which means they may not be as well suited for other types of workflows, such as big data analysis.

For all of these reasons, and because of the inherent probabilistic nature of LLMs, verification and validation are crucial when using LLMs in scientific research. This can be done through various means, such as comparing LLM-generated results with existing research findings or conducting experiments to validate LLM-generated hypotheses. Not all LLMs are fine-tuned for biology, which is an essential consideration for biotech research (although work is happening in this space!). LLM-generated results may therefore not always be applicable or accurate for biomedical research, and results must be carefully validated by an expert.
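Because the same prompt can yield different outputs, one cheap first check is to ask the question several times and measure how often the answers agree. The sketch below assumes the answers have already been collected as short strings; the function name, the threshold, and the sample answers are hypothetical. Agreement is not the same as correctness, so this only helps decide which results most need expert review.

```python
from collections import Counter
from typing import List, Tuple


def agreement(answers: List[str]) -> Tuple[str, float]:
    """Return the most common answer and the fraction of samples that gave it."""
    if not answers:
        raise ValueError("need at least one answer")
    # Normalize lightly so trivial formatting differences do not count as disagreement.
    normalized = [a.strip().lower() for a in answers]
    top_answer, count = Counter(normalized).most_common(1)[0]
    return top_answer, count / len(normalized)


def needs_review(answers: List[str], threshold: float = 0.8) -> bool:
    """Flag a question for closer expert review when samples disagree (threshold is arbitrary)."""
    _, fraction = agreement(answers)
    return fraction < threshold


if __name__ == "__main__":
    # Hypothetical repeated answers to one question.
    samples = ["Yes", "yes ", "No", "yes"]
    print(agreement(samples), needs_review(samples))
```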

Finally, one practical limitation of integrating commercially available models is their API response times. GPT-4 API responses regularly take 10+ seconds to complete, and with more complex workflows requiring multiple API calls, this quickly becomes prohibitively slow. However, as the technology matures and becomes more widely available, we are optimistic that this limitation will prove to be a short-term issue.
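To make the arithmetic concrete: at roughly 10 seconds per call, a workflow that chains five calls takes about 50 seconds. The sketch below uses a simulated call (no real API is contacted, and `fake_llm_call` is a hypothetical stand-in) to show that calls which do not depend on each other can be issued concurrently, while chained calls, where each step needs the previous answer, still pay the full sum.

```python
import asyncio
import time
from typing import List


def estimate_sequential_seconds(n_calls: int, seconds_per_call: float) -> float:
    """Total wait when each call must finish before the next one starts."""
    return n_calls * seconds_per_call


async def fake_llm_call(prompt: str, latency_s: float) -> str:
    """Stand-in for a slow API request; it only sleeps and echoes the prompt."""
    await asyncio.sleep(latency_s)
    return f"response to: {prompt}"


async def run_sequential(prompts: List[str], latency_s: float) -> List[str]:
    # Each call waits for the previous one, so latencies add up.
    return [await fake_llm_call(p, latency_s) for p in prompts]


async def run_concurrent(prompts: List[str], latency_s: float) -> List[str]:
    # Independent calls overlap; gather returns results in input order.
    return list(await asyncio.gather(*(fake_llm_call(p, latency_s) for p in prompts)))


if __name__ == "__main__":
    prompts = ["step A", "step B", "step C"]
    for runner in (run_sequential, run_concurrent):
        start = time.perf_counter()
        asyncio.run(runner(prompts, 0.2))  # 0.2 s stands in for a much slower real call
        print(runner.__name__, round(time.perf_counter() - start, 2), "seconds")
    print("five chained 10 s calls:", estimate_sequential_seconds(5, 10), "seconds")
```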

The Future

The impact of LLMs in scientific research is just starting to be understood. Ongoing innovation in this field is progressing rapidly, and it is important for researchers to embrace and contribute to its advancement. Many of the current limitations of LLMs described earlier are expected to be mitigated both as the core technology improves and as more tooling is built around LLMs. For example, OpenAI’s recent work with plugins, notably Code Interpreter, extends LLM capabilities to include data analysis.

One exciting avenue is the growing interest in AI-powered agents, which are embodied LLMs with access to custom tools that extend their ability to take actions. Projects like AutoGPT and BabyAGI have already taken initial steps in this direction. Internally, we’ve also created tailored tools that grant agents knowledge of our internal data schemas and platform functionality.
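The core idea behind tool-using agents can be sketched in a few lines: the model proposes an action, surrounding code runs the matching tool, and the result is fed back until the model gives a final answer. Everything below is hypothetical and simplified: `stub_model` is a hard-coded stand-in for an LLM, the `ACTION`/`FINAL` text protocol is invented for this example, and the `samples` schema is made up. It does not reflect how AutoGPT, BabyAGI, or any specific platform is implemented.

```python
from typing import Callable, Dict, List


def lookup_schema(table: str) -> str:
    """Hypothetical tool: return the column names of a table."""
    schemas = {"samples": "sample_id, tissue, donor_id"}  # made-up schema for illustration
    return schemas.get(table, "unknown table")


# Registry of tools the agent is allowed to call.
TOOLS: Dict[str, Callable[[str], str]] = {"lookup_schema": lookup_schema}


def stub_model(history: List[str]) -> str:
    """Stand-in for an LLM: request a tool first, then answer from its result."""
    if not any(line.startswith("OBSERVATION") for line in history):
        return "ACTION lookup_schema samples"
    observation = history[-1].split(" ", 1)[1]
    return "FINAL The samples table has columns: " + observation


def run_agent(question: str, max_steps: int = 5) -> str:
    history = [f"QUESTION {question}"]
    for _step in range(max_steps):
        reply = stub_model(history)
        kind, _sep, rest = reply.partition(" ")
        if kind == "FINAL":
            return rest
        if kind == "ACTION":
            tool_name, _sep, arg = rest.partition(" ")
            tool = TOOLS.get(tool_name)
            # Unknown tools are reported back to the model instead of raising.
            observation = tool(arg) if tool else f"no tool named {tool_name}"
            history.append(f"OBSERVATION {observation}")
    return "stopped: step limit reached"


if __name__ == "__main__":
    print(run_agent("What columns does the samples table have?"))
```

The step limit matters in practice: without it, a model that keeps requesting tools would loop indefinitely.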

Additionally, the limits of an LLM’s knowledge can be addressed by creating fine-tuned models trained specifically on scientifically relevant information. For example, Microsoft released BioGPT late last year, which was fine-tuned for biomedical applications.

One additional hurdle to overcome in science is the connection between LLMs and computational resources. Modern-day scientific research often involves complex analyses that need powerful hardware well outside the limits of a standard LLM. Bridging this gap and ensuring adequate computational infrastructure is a vital consideration for fully harnessing the potential of LLMs in scientific research.

In Conclusion…

At Enable Medicine, our vision is to have LLMs serve as true research assistants, augmenting researchers' capabilities and enabling groundbreaking discoveries. By overcoming current limitations, embracing innovation, and integrating LLMs into the research process, we can usher in a new era of discovery and productivity.

If you’re interested in seeing how we’ve already begun integrating LLMs into our biological research platform, read our blog post announcing our initial launch and sign up here!
