Planning for Data Sharing - Building an Ecosystem for Open Science

What does it take to realize the dream of open science, where data is freely shared and where scientists can easily and transparently build on the work of others? In this post, I explore this question, discuss why simple data storage in catch-all repositories is not enough for open science to happen, and describe what a possible solution would encompass.

Most scientists I know are ardent supporters of the idea of open science and data sharing. There are so many benefits — larger and more robust datasets, less wasted effort, and, in theory, faster discovery and innovation. Now that more people and computational resources are connected through the internet, file sharing services, and the cloud, than ever before, naively, it would seem like we have all the tools necessary to fully share all the data.

Yet, many feel trepidation in the face of the NIH data sharing directive taking effect this year, which states that all NIH-funded research must have a plan to make the data publicly available. This is especially true in fields, such as spatial biology, where the datasets can be massive (some are in the hundreds of terabytes range). For labs that generate these large datasets, cloud storage can be an expensive proposition. Furthermore, without guidance on how long these datasets need to be publicly available, researchers are nervous that they may need to pay a price for generating large datasets indefinitely.

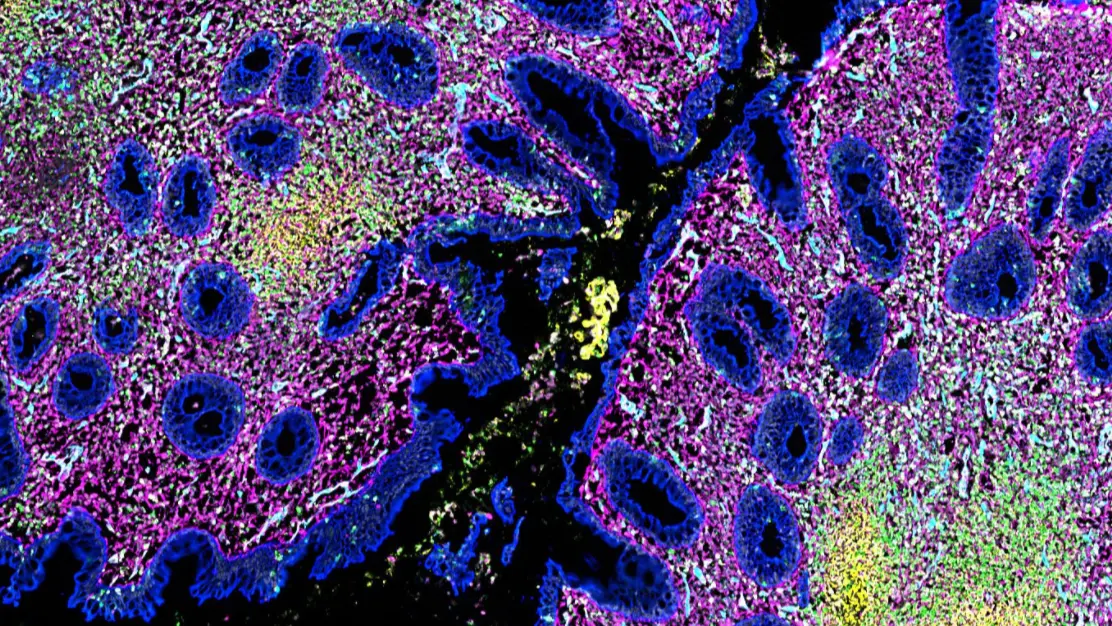

If the end goal is open science, though, cloud storage only solves part of the problem. Even if a lab could afford to throw all of its raw data into an AWS database S3 bucket indefinitely, thus allowing anyone with a sufficiently strong internet connection and large enough disk space to download the data, this might not be enough to make the data usable or accessible. For example, multiplexed immunofluorescence histology slides generate image data containing many fields of view that are stitched together. With relatively minimal effort, a researcher could upload a folder of the individual fields of view (i.e., the raw, unprocessed data). But doing so would, in many cases, render the data practically unusable: the downloader would need to assemble the image, which may or may not be mixed with tiles from other images; understand what the image represents, its source, and the conditions under which the sample was harvested (information that might not be easily associated with the files); and preprocess the image files (e.g., background subtraction, normalization, de-noising, etc.), in order to perform analysis. And all this assumes that the downloader has the hardware and software requirements to open the files in the first place. Most data uploaders would not be so intentionally lackadaisical, but in this exaggerated case, it is technically true that all of the data necessary to replicate the analysis was made available. The letter of the law was followed, if not the spirit.

Besides, even if the data were stored and labeled in a reasonable way, an Amazon S3 bucket is still relatively isolated — the data that is stored there is not integrated into an environment that makes it easy for users to search and aggregate from multiple sources. And if users aggregate the datasets themselves by downloading copies of the data, it only creates another siloed instance of the datasets. Furthermore, there is no guarantee that downstream analysis from the dataset is replicable, nor does it guarantee that the analysis can be implemented on other similar datasets.

I would argue that while data availability is necessary for open science, people also need to know that the datasets exist, where to access them, and how to use them for the dream of open science to be fully realized (see also FAIR standards).

Still, there have been numerous successes of data sharing that have promoted open science. By studying these examples, we might find some design rules to build the infrastructure necessary to enable true data sharing and open science for large multi-omic biological research.

One highly successful resource that has been built through the efforts of hundreds of labs around the world is the Protein Data Bank (PDB), which is used worldwide as a reference for protein structures. One reason for the PDB’s success is undoubtedly its relatively limited scope (it is only a repository for protein structure, and only distributes files in the standard PDBx/mmCIF format). This means that standards for submissions to it can be very strict, which makes it easy to guarantee that files are valid, openable, and thus usable. Given the widespread adoption of the PDB as a resource as well as the development of tools for converting non-standard files into PDBx/mmCIF data format, almost all programs that export crystal structure data will output the correct format, decreasing the burden on the laboratories generating these crystal structures.

Similar efforts to standardize clinical/medical data such as the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM) are being undertaken, and these efforts have improved the integration of medical records across healthcare organizations. Adoption of the OMOP CDM allows researchers to query clinical data from multiple sources, and furthermore enables the development of analysis toolkits, because of the establishment of data structure standards.

But OMOP CDM isn’t perfect in that, while there are standard measurements and observations that are taken by physicians, there are also many yet-to-be-standardized, not-yet-developed, and/or non-standard tests and observations that can be taken. This means that the CDM vocabularies constantly need to be updated to take into account technology development, and furthermore, that there is potentially a lot of non-standard data that isn’t being captured by the CDM.

A similar problem plagues large multi-omics datasets: In these rapidly developing fields, it is difficult to define standards because there are so many unknowns about what information is important for analysis. Spatial biology data has the additional problem of being comprised of images rather than text or numbers, and though there are standard image data file formats (e.g., .png or .jpg for digital images in general, and OME-TIFF/OME-NGFF for biological imaging), images are difficult to integrate and parse for queries and analysis.

In general, using standard image file types only ensures that the image data can be viewed and analyzed by a set of open-source tools. It does not guarantee that the metadata that accompanies the image data is complete or can be read, labeled, or tagged for searchability, especially since there are very few standards for reporting the metadata tags themselves. Furthermore, querying the image content itself for specific features (i.e., content-based image retrieval), though an active area of research, remains a challenge for many biological specimens and structures, often due to context specificity that can interfere with standardization. On top of all this, researchers are actively and constantly discovering new features and characteristics that are relevant to different diseases or health conditions.

The difficulties of creating searchable spatial biology has meant that no real solutions and standards for data sharing have been developed. This also makes it an interesting and exciting technical challenge and opportunity.

As we’ve built the Enable Medicine Platform and thought about how to make it scalable and usable, we’ve come to realize that open science needs more than just a catch-all repository. The database needs to be indexed well and impose structure and standards, ensuring that the data is findable, usable, and modular (i.e. inter-operable). Furthermore, having a well-defined database structure can help ensure that the development of analysis pipelines are robust and repeatable not only on the original dataset, but also on other similar datasets.

Additionally, the standards that we develop must be flexible enough to evolve with the field of spatial biology. And because we expect changes to be made to the dataset structures, any analysis tool that we develop to operate on these datasets must also be either robust to these changes or version-controlled alongside the data to guarantee reproducibility.

To work towards fulfilling these requirements, we have needed to integrate indexed storage with reproducible data processing, essentially building a computing environment forspatial biology data. This has also required that we define standards for indexing and abstraction (i.e. metadata tagging) from image data, both of which will move us towards comprehensive automated image query and analysis pipelines. These are no easy tasks! But by taking this more holistic view of data sharing, we hope to build a truly healthy and transparent research ecosystem where the benefits of open science are fully realized.

.svg)

.svg)